Research on Networked Multimedia


Networked Multimedia

|

Networked multimedia applications such as multimedia conferencing,

distance learning, collaborative work in networked virtual environments,

and networked games attract a great deal of attention. |

Collaborative Work with Haptic Media

|

We carry out collaborative work such as remote design and remote surgery simulation

by touching objects in a 3-D virtual space

with a haptic interface device (SensAble Technologies, Inc.).

We can expect that using haptic media in addition to voice and video greatly

improves the efficiency of collaborative work.

A number of studies deal with collaborative work with haptic media. However, most of them handle only one object in a 3-D virtual space. This study handles play with building blocks (i.e., objects) in which two users lift and move the blocks collaboratively to build a dollhouse in a 3-D virtual space (see the following figure). The dollhouse consists of 26 blocks. We deal with three cases of collaborative play. In the first case, the two users carry the blocks by holding them together. In the second case, the users carry the blocks alternately by holding them separately. In the third case, one user carries each block from a position and hands it to the other user, who receives it and then carries it to another position. By subjective and objective assessment, we investigate the influences of network latency and packet loss on the collaborative play. In the following figure, the two users are piling up building blocks collaboratively to build the dollhouse by manipulating haptic interface devices.

In the near future, we plan to handle a variety of applications. |

Remote Control Systems with Haptic Media

|

We control a haptic interface device with another remote haptic interface device.

As such applications, we deal with remote haptic instruction systems (for example, a remote calligraphy system and a remote drawing instruction system) and remote haptic control systems.

The remote drawing instruction system lets a teacher guide a student's brush strokes while both of them feel the sense of force interactively.

The following figure shows a remote haptic instruction system.

A whiteboard marker is attached to each haptic interface device stylus at the teacher and student terminals.

We can use the system to write characters and to draw figures while watching video.

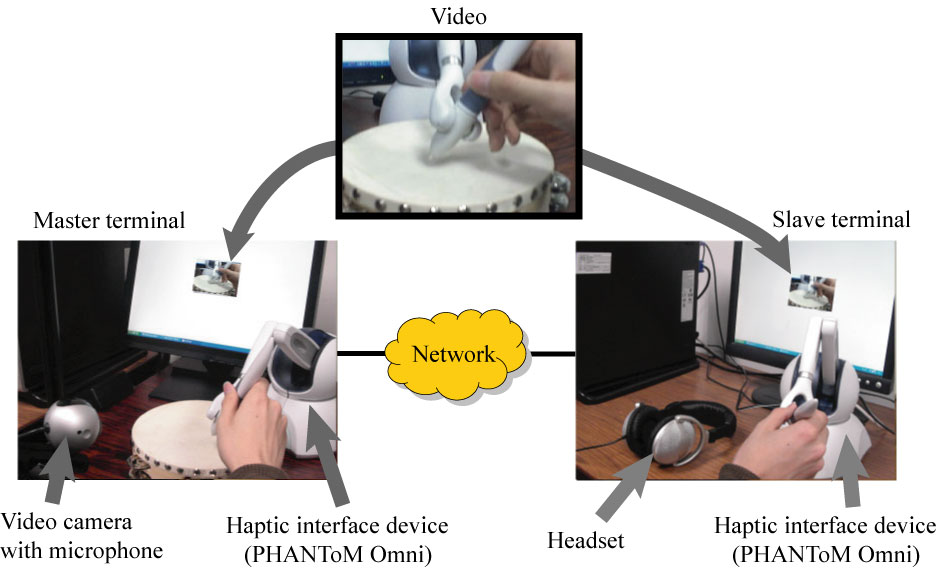

The following figure shows a remote haptic control system.

In the system, a user controls a haptic interface device at the slave terminal with another haptic interface device at the master terminal while watching video.

|

Haptic Media and Video Transfer System

|

The haptic media and video transfer system conveys the haptic sensation experienced by a user to a remote user. In the system, the user controls a haptic interface device with another remote haptic interface device while watching video (see the following figure). In the figure, the user is touching a Rubik's Cube.

Haptic media and video of a real object which the user is touching are transmitted

to another user. We handle the case in which a user of the master terminal touches

the object located at the master terminal by using a haptic interface device while watching video,

and the case in which a user of the slave terminal touches the object located at

the master terminal while watching video. In the former, the haptic media are transferred

from the master terminal to the slave terminal; in the latter, the haptic media

are transmitted in both directions between the master and slave terminals.

Therefore, interactivity is more important in the latter than in the former.

In addition, by adding sound to the system, we construct the haptic media, sound and video transfer system (see the following figure), and we investigate the influence of the network latency on the media output quality. The figure shows a user of the slave terminal beating a tambourine.

In the next step of our research, we plan to study a method for transmitting haptic sensation with higher quality. |

Olfactory and Haptic Media Transfer System

|

The olfactory and haptic media transfer system enables multiple users to share a work space via a network by using haptic interface devices and olfactory displays (SyP@D2). As such systems, we deal with an olfactory and haptic media display system and a remote ikebana system.

When we transmit olfactory and haptic media through a network, inter-stream synchronization errors may occur owing to the network delay and jitter. We clarify the influence of the inter-stream synchronization error between smell and reaction force (i.e., between olfactory and haptic media) by using the system. We also make a fruit harvesting game by enhancing the system and study QoS control to solve the problems caused by the network delay, delay jitter, and packet loss.
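To make the notion of an inter-stream synchronization error concrete, the sketch below computes it for one pair of corresponding media units. It assumes one common definition, which this page does not spell out: the difference between the two units' output times minus the difference between their generation times. The function name, the timestamps, and the millisecond unit are hypothetical.

```python
def inter_stream_sync_error(gen_a, out_a, gen_b, out_b):
    """Skew between two streams for one pair of corresponding media units.

    Assumed definition: (output-time difference) - (generation-time difference).
    All times are in milliseconds on a common clock (an assumption).
    A positive value means stream A is output late relative to stream B.
    """
    return (out_a - out_b) - (gen_a - gen_b)


# Hypothetical example: a smell unit and a haptic unit generated together.
smell_generated, smell_output = 1000, 1180    # 180 ms end-to-end delay
haptic_generated, haptic_output = 1000, 1020  # 20 ms end-to-end delay

error_ms = inter_stream_sync_error(
    smell_generated, smell_output, haptic_generated, haptic_output
)
print(error_ms)  # 160
```

Under this definition the error equals the difference between the two streams' end-to-end delays, so delay jitter on either stream changes it from one media unit to the next.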

The fragrance of a flower is assumed to reach locations which are within a constant distance from the corolla of the flower. That is, we can perceive the fragrance of the flower in a sphere (called the smell space) as shown in the following figure. When the viewpoint of the teacher or student enters the smell space of a flower, he/she can perceive the fragrance of the flower.

We proposed dynamic control of the output timing of fragrance as QoS control to achieve remote ikebana with a highly realistic sensation. |
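The smell-space test described above reduces to a point-in-sphere check. The following is a minimal sketch under that reading; the function name, the coordinates, and the radius value are illustrative assumptions, not values from the system.

```python
import math


def in_smell_space(viewpoint, corolla, radius):
    """Return True if the viewpoint lies within `radius` of the corolla.

    `viewpoint` and `corolla` are (x, y, z) positions in the virtual space;
    `radius` is the constant reach of the fragrance (hypothetical value below).
    """
    return math.dist(viewpoint, corolla) <= radius


corolla = (0.0, 1.0, 0.0)
radius = 0.5  # assumed radius of the smell space, in virtual-space units

print(in_smell_space((0.0, 1.2, 0.3), corolla, radius))  # True
print(in_smell_space((1.0, 1.0, 0.0), corolla, radius))  # False
```

A system would typically run such a check for each flower whenever the viewpoint moves and drive the olfactory display when the result becomes true.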

Networked Real-time Games

|

As networked games, we handle a racing game, a shooting game, and so on. In the networked racing game, players compete with each other by steering their own cars. In the networked shooting game, two players fight with each other. Each player fires shots at the other player's fighter while moving his/her fighter to the right or to the left, and he/she avoids shots fired by the other player by shielding his/her own fighter under buildings. When a shot hits a fighter, the fighter is blown up; when it hits a building, the building is damaged.

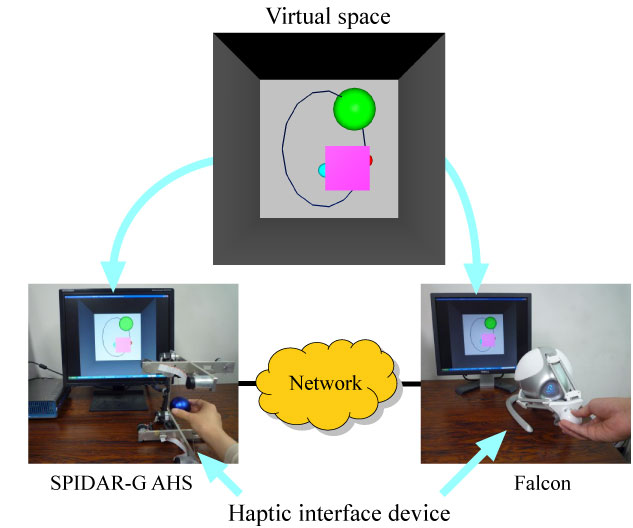

We handle a networked real-time game in which two players operate their own objects competitively by manipulating haptic interface devices as shown in the following figure. Each player lifts and moves his/her object (a rigid cube) so that the object contains the target (a sphere) in a 3-D virtual space. When the target is contained by either of the two objects, it disappears and then appears at a randomly-selected position in the space. We need to keep the causality, the consistency, and the fairness between the players in the game.
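The capture rule of this game can be sketched as a containment test followed by a random respawn. The sketch assumes that the cube is axis-aligned and that "contains" means the sphere lies entirely inside the cube; neither detail is stated on this page. All names, sizes, and space bounds are hypothetical.

```python
import random


def cube_contains_sphere(cube_center, cube_edge, sphere_center, sphere_radius):
    """True if the sphere lies entirely inside an axis-aligned cube (assumption)."""
    half = cube_edge / 2.0
    return all(
        abs(s - c) + sphere_radius <= half
        for s, c in zip(sphere_center, cube_center)
    )


def respawn_target(rng, space_min, space_max):
    """Pick a new target position uniformly at random within the given bounds."""
    return tuple(rng.uniform(lo, hi) for lo, hi in zip(space_min, space_max))


rng = random.Random(0)      # seeded only to make the sketch repeatable
target = (0.2, 0.0, 0.0)    # hypothetical target position
target_radius = 0.5
player_cube_center = (0.0, 0.0, 0.0)
player_cube_edge = 2.0

if cube_contains_sphere(player_cube_center, player_cube_edge, target, target_radius):
    # The target disappears and reappears at a randomly selected position.
    target = respawn_target(rng, (-5.0, -5.0, -5.0), (5.0, 5.0, 5.0))

print(target)
```

In a networked setting, both terminals would have to agree on which player captured the target and on the new position, which is where the causality, consistency, and fairness requirements come in.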

We also deal with a networked haptic game with collaborative work. As shown in the following figure, two groups (groups a and b), each of which consists of two players, play a networked haptic game. The two players in each group move their object collaboratively by putting the object between the two cursors of the haptic interface devices in a 3-D virtual space. When the target is contained by either of the two objects, it disappears and then appears at a randomly selected position in the space. Since we handle both collaborative work and competitive work together, we need to maintain the efficiency and fairness of the game.

Furthermore, we handle a fruit harvesting game by enhancing an olfactory and haptic media display system. |

Remote Robot System with Force Feedback

|

We study QoS control and stabilization control for a remote robot system with force feedback in order to achieve a high-quality and highly stable system. In the system, a user operates a remote industrial robot equipped with a force sensor by using a haptic interface device while watching video.

We deal with several types of work, such as writing a small character and pushing balls of different softness, and we study QoS control for accurate transfer of pen pressure and of softness when pushing each ball even when the network delay is large. We try to achieve control precise enough to pass a thread through the eye of a needle.

We also handle cooperative work such as carrying an object together and hand delivery of an object between two remote robot systems to study spatiotemporal synchronization control (control which moves robots at the same height, angle, and timing). We further suppose that we operate movable robots, and we achieve hand delivery while moving the robot arms. It is important to avoid applying a large force to an object so as not to break it.

As the next step of our research, we plan to operate movable robots remotely. |

| In addition, our laboratory has carried out the following research. |

Networked Haptic Museum

|

In a networked haptic museum, which is a distributed virtual museum with touchable exhibits, an avatar explains exhibited objects (e.g., an Egyptian mummy sculpture, a painting of sunflowers by Vincent van Gogh, and a dinosaur skeleton and one of its fangs) with voice and video while manipulating the objects by using a haptic interface device as shown in the following figure. Multiple users touch the objects while watching/listening to the avatar's presentation and ask him/her questions. Each user lifts and moves an exhibited object in order to feel the object's weight. The avatar explains the part of the object which a user is touching through a haptic interface device.

|

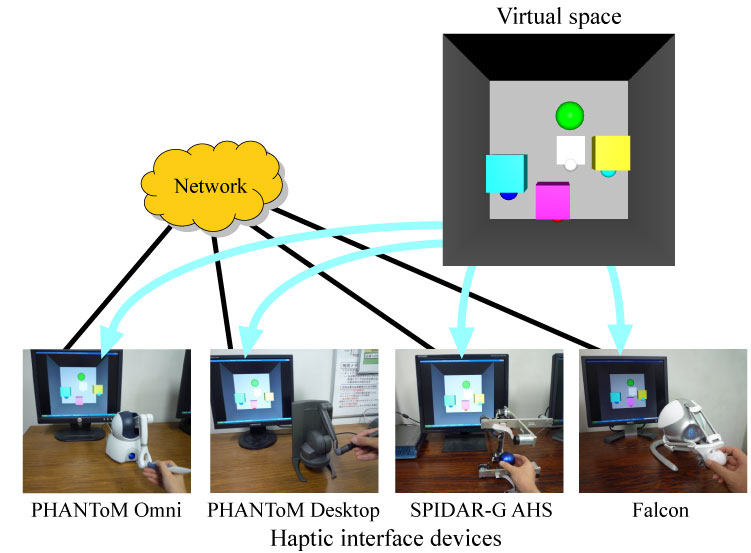

Interconnection among Heterogeneous Haptic Interface Devices

|

In the above applications, multiple media streams are temporally related to

each other.

Also, the requirement for interactivity is very stringent;

that is, the applications need small network delays. |